Many voice AI providers highlight impressively low latency numbers, but those claims often measure only a portion of the processing pipeline, not the full end-to-end conversational delay users actually experience. This article breaks down what voice AI latency really is (the time from when a user finishes speaking to when the system begins responding), why partial measurements can mislead, and how true conversational latency should be measured and optimized in real-world deployments.

How to deliver low latency voice AI performance you can feel

Voice AI is everywhere right now. Demos are flashy, and everyone claims their bot "feels human." But once you start building, you hit a common wall: latency.

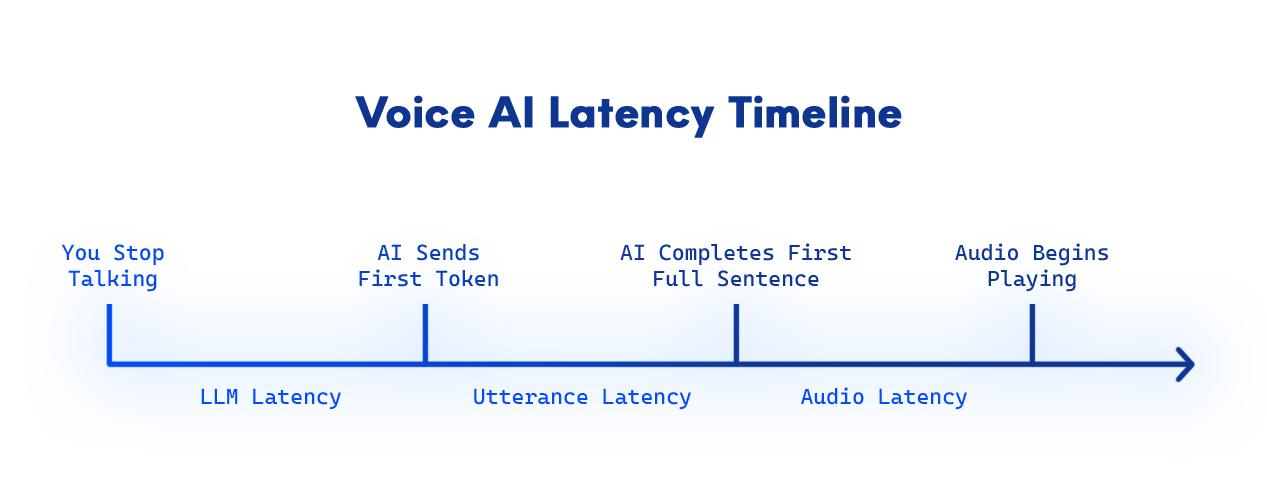

What is voice AI latency?

Voice AI latency is the total delay between when a user finishes speaking and when the AI starts talking back.

It includes every stage in the process, from network transit and speech recognition to LLM processing and text-to-speech playback.

The delay between when a person finishes speaking and when the AI starts responding is the #1 factor in how "real" a conversation feels. And most platforms fall short, while claiming to be even faster to respond than a human.

Here’s how a lot of voice AI providers trick you: they only measure one step of the process, when there are actually several steps involved, and call that "latency." This is how you’ll see claims of sub-100ms latency. They’ll pick the shortest part of the call, whatever that is, and claim that as the overall, roundtrip latency.

Don’t fall for it!

This step they’re measuring could be anything from how long it takes to produce the first AI token to how long it takes to send your voice to the LLM. They could be measuring only network hops or STT pipeline steps, not end-to-end conversation delay. But in the real world, users feel the delay from the last word they speak to the first word they hear, and these providers are not giving you the true numbers measuring that full delay.

Let’s break down the myths around latency, the real measurements for best results, and the architecture that actually makes the fastest voice AI in the game.

How fast can voice AI respond?

Physics alone makes true sub-100ms roundtrip impossible today. Even human reaction times average more than that, and AI pipelines have to run speech-to-text, LLM, and text-to-speech before a single syllable reaches your ears.

Many voice AI agent solutions regularly deliver 2 to 3 seconds of delay roundtrip. The user finishes speaking, waits, waits, and then hears the AI talk. It’s awkward, robotic, and kills the illusion of real conversation.

You can try it yourself. Certain AI builders like LiveKit will tell you the latency, right inside the call. There, you’ll see the "overall" latency listed as something closer to 2 seconds.

What types of latency should you actually measure?

There’s no industry standard for measuring voice AI latency as of right now. Every provider will measure different parts of the pipeline, and most will be opaque about it. Here’s how we break it down at SignalWire:

1. LLM response latency

How fast does the LLM generate the first part of the text to speech reply after the user finishes speaking?

This first chunk represents the time from when the user finishes talking to when the first token is generated by the LLM. This shows how quickly the AI brain starts producing text. Some vendors may claim the time spent here… and stop measuring.

But this is only the start.

2. Utterance response latency

When does the AI finish thinking? This number represents how long until the AI figures out something to say, or time from when the user finishes talking to when the full first clause or coherent chunk of a sentence is ready to be spoken. This is what prevents half-words or awkward mid-sentence stalls.

This matters for clarity: if the AI hesitates mid-sentence, the illusion breaks.

3. Audio response latency

This is the real number that matters: how long from when the user stops talking until the audio response starts playing back to them.

This is the latency people actually notice. It’s what makes the AI feel responsive. Or not.

So if a voice AI agent provider is only representing LLM speed, they’re obscuring the problem.

To avoid being tricked by this, look for overall, or roundtrip latency. Often, you’ll find this number around 2-3 seconds at least… even for vendors advertising 200ms or less (which would be uncomfortably fast).

Roundtrip latency = end of your speech → audio playback. Anything less is partial accounting.

Other audio latency types

While these measurements are how we calculate latency at SignalWire, there are a few other sections of a call that providers could be measuring or terms they could be using to claim the lowest possible latency.

Network ping latency: Round-trip time for a small packet to travel to a server and back. This number could be anywhere from 10ms for a local link to 300ms for an intercontinental link. This is the absolute minimum delay every system builds on; while it affects every other latency, by itself tells you nothing about voice AI performance.

VoIP one-way audio latency: Time for audio to travel from speaker to listener in one direction. This could take from 20ms-400ms. This is mouth → ear, but only for one direction and without AI.

VoIP round-trip latency: Speech → listener → speech response (two-way path). Ranges from 100-600ms. This is still pre-AI, or classic voice call latency. In AI systems, it forms the transport backbone of total delay.

Turn-taking latency (conversational AI): Time from end of user speech to start of AI response. It’s the "gap" users feel in a voice AI conversation. Includes VAD, STT, TTS, and the media pipeline.

Time to first token: How long until the LLM produces its first output token, or one portion of turn-taking latency. 300–500 ms for average models.

Time to first byte: How long until the first chunk of synthesized audio is ready for playback.

End-to-end latency: This is the total real-world delay of when you talk to when you hear something back. Essentially, another term for the roundtrip number.

How does SignalWire reduce latency?

At SignalWire, we do things differently.

We’re the creators of FreeSWITCH, the telecom core that powers global communications, and we’ve been doing low-latency voice for two decades. Our AI voice agent infrastructure runs directly inside a unified media engine. No shuttling audio to random APIs. No extra hops.

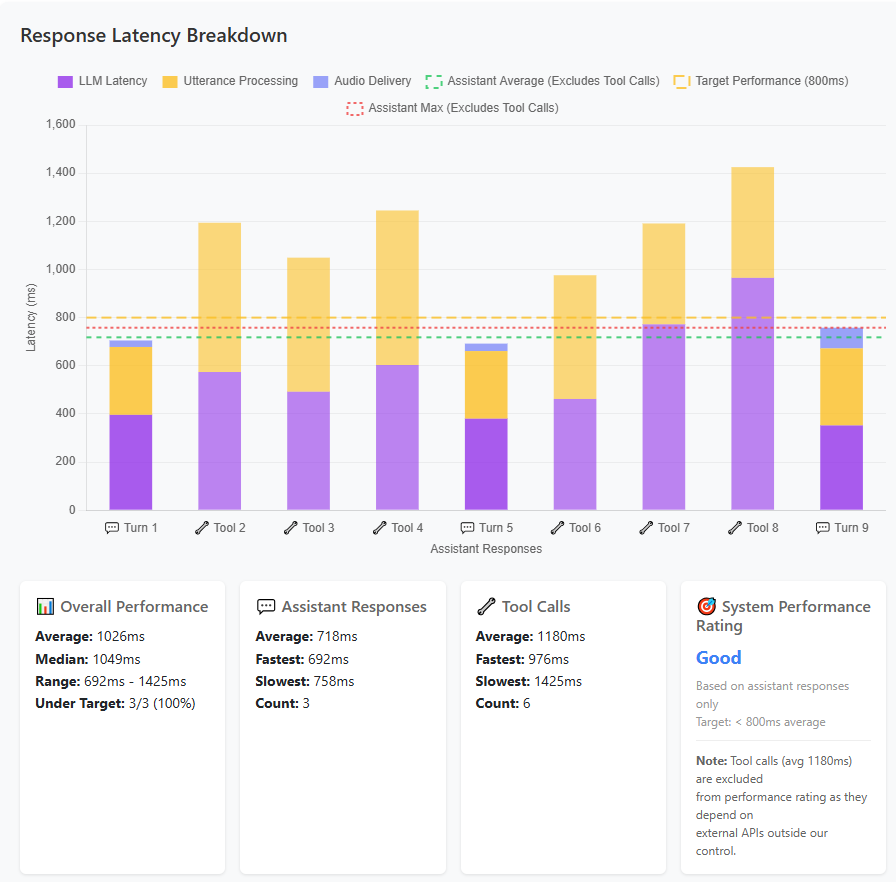

With this architecture, we deliver true 900-1500ms roundtrip latency. And when we say "roundtrip," we mean from end-of-speech to audio playback, not just how fast a model produces a token.

This is not theoretical. It's what our production systems actually deliver at scale.

SignalWire is not just another CPaaS vendor bolting AI on the side. We built our platform as a full implementation of Programmable Unified Communications (PUC), which gives us massive architectural advantages:

Native media stack

Audio routing, recording, TTS, and STT live inside the media engine

No external calls needed to analyze or synthesize audio

Embedded AI orchestration

LLM and TTS orchestration happen within the call context

AI agents can be spawned, stopped, and redirected without leaving the call

Real-time interruptibility

AI agents can barge, pause, retry, and rephrase mid-call

AI can maintain context and fluidity

Our secret: Transparent Barge

At the heart of SignalWire’s low-latency AI voice system is Transparent Barge, a proprietary real-time media strategy baked into our architecture. This is a core part of how our AI agents think and respond. The AI will wait for a user to finish talking without speaking over them, and any attempt the LLM makes to barge will be ignored.

Put simply, Transparent Barge is what lets our AI agents talk and think at the same time. Here’s how it works:

Live audio is streamed to the LLM every 250ms during speech.

If the user pauses, the AI begins generating a response.

If the user resumes speaking, the AI wipes its draft, glues together the old and new input, and restarts processing.

The result is interruptible, overlapping thought and speech, just like how humans talk. This eliminates the hard cutoffs and end-of-speech guessing that most systems struggle with.

This system ensures AI agents don’t lag behind the conversation. They’re always caught up and ready to reply the moment you’re done with a thought, or reprocess if you change your mind mid-sentence.

There’s no need to wait until a transcript is finalized. We listen smarter and faster.

Built for builders

CPaaS platforms aren’t designed for real-time audio control. They treat media as an "audio stream" feature, not as a living, controllable signal. So if you're building your AI agent on someone else's CPaaS, you're already behind.

With SignalWire, you can build voice agents using:

SignalWire Markup Language (SWML): our declarative scripting language

Agents SDK: deploy Python agents connected via SignalWire AI Gateway (SWAIG), our version of the MCP

WebRTC, SIP, PSTN: support softphones, mobile apps, and desk phones

Real-Time APIs: trigger workflows, integrations, transfers, and SMS mid-call

All with transparent latency tracking built in.

If it’s not under 1.5 seconds, it’s not real-time

When you’re building voice experiences that answer, help, route, or close, every millisecond counts. Your users feel the delay. And once they do, it’s over.

SignalWire is the only voice AI platform delivering true real-time interactions in as little as 900ms, because we built the infrastructure to do it.

Start building with SignalWire today and bring your voice AI agents to life without the lag. Create a free account to get started and join our developer community on Discord to get help if you need it.

Frequently asked questions

What is voice AI latency?

Voice AI latency is the total time between when a person stops speaking and when the AI starts responding. It includes network delay, speech-to-text processing, language model reasoning, and text-to-speech playback.

What causes delay in voice AI systems?

Latency in voice AI comes from multiple stages of the processing pipeline: audio capture and encoding, transmission to the server, STT conversion, LLM generation time, and TTS synthesis. Each step adds milliseconds that stack up to noticeable delay.

How much latency is normal for real-time voice AI?

Typical round-trip latency in most voice AI platforms is 2–3 seconds. A truly real-time system should respond under 1.5 seconds total, including speech recognition, reasoning, and audio playback.

Is sub-100 ms latency possible in voice AI?

No. Physics alone makes true sub-100 ms round-trip impossible. Even human reaction time averages around 250ms, and AI systems must perform several processing steps before generating speech.

How can I reduce latency in my voice AI application?

You can minimize latency by partnering with a provider who reduces network hops, uses low-latency transport like WebRTC, runs STT/LLM/TTS in the same environment, and streams partial results as they’re generated.