Structured prompts improve the reliability and maintainability of AI agents compared to freeform text blobs. The Prompt Object Model (POM) is a programmatic framework for composing, organizing, and rendering prompts using hierarchical sections, subsections, and rules, letting developers treat prompting like software architecture rather than unstructured text. By rendering consistently formatted prompt output (Markdown or JSON) from structured definitions, POM helps teams scale prompt engineering across agents, enforce business logic, and integrate prompts into workflows with traceability and version control.

Smarter Voice AI Prompt Engineering

If you're building anything that involves AI and telephony (voice AI agents, IVRs, virtual assistants), you’ve probably experienced the limitations of freeform prompts: the ones that start with “You are a helpful assistant…” and end with a list of behaviors you're hoping the LLM will follow.

This approach works fine for prototyping, or for static, chat-based LLMs, but quickly becomes unmanageable at scale. And for deploying real systems that handle sensitive tasks like bookings, support, ordering, or authentication, it falls apart fast.

At SignalWire, we’ve introduced a different method: structured prompting via the Prompt Object Model (POM). This is a programmatic framework for writing, managing, and executing structured LLM prompts that plug directly into the AI engine inside our Call Fabric platform.

This post breaks down what POM does for AI agents and why structured, code-driven prompting is essential for building real-world voice agents that work every single time.

Why traditional prompting fails

When we’re talking about voice AI agents, we’re really talking about building software that people are going to interact with in real time. In traditional prompt engineering, especially with tools like GPT or other LLMs, prompts are often just long paragraphs of text.

But what happens when the prompt of your real-world application is buried in some hardcoded block of text and you want to change just one part? Or test two versions? Or enforce a business rule like "never sell breakfast after 10:30 AM"?

Most LLM-based systems today lean on a monolithic prompt blob passed at runtime:

You are a drive-thru assistant. Suggest combos. Don’t let people order too many items. Offer upsells. Apply discounts.

This may produce the right response some of the time. But in real-time voice applications where users expect accuracy, speed, and consistency, monolithic prompts like this fail fast.

Why?

They’re non-deterministic (the LLM decides what matters)

They’re hard to reuse or version-control

They’re impossible to debug when things go wrong

Most developers end up re-engineering these bits of text across multiple places in their app, often resulting in inconsistent behavior, or breaking things entirely. And in a real-time voice application, these problems can become entire product failures, like what happened with Taco Bell’s AI.

When you’re handling live calls, authentication, reservations, or orders, vague prompts aren’t enough. You need software-grade structure.

SignalWire solves these issues with POM, a structured and programmable way to build, manage, and scale LLM prompts. You define your prompt like you would a function or a config file. Then the AI reads that prompt as structured logic, not as simple paragraphs.

What is POM?

SignalWire’s Prompt Object Model (POM) introduces a programmatic way to define prompts using structured sections, subsections, bullets, and rendering formats (Markdown or JSON) for full developer control. This prompt structure lets developers compose logical, layered instructions for LLMs like an outline or a decision tree.

With SignalWire’s signalwire-pom package, you build prompts using real code, like this:

This renders to markdown like:

And you can keep nesting logic, structuring responses, and building reusable sections across your entire application. This gives you traceability, auditability, and modular design.
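To make the idea of sections, bullets, and nested subsections concrete, here is a minimal, self-contained sketch. It is not the signalwire-pom API: the `Section` class, its fields, and the `render_markdown` method are hypothetical stand-ins written only to show how a prompt defined as objects can be rendered to Markdown.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    """Hypothetical prompt section (an illustration, not the signalwire-pom API)."""
    title: str
    body: str = ""
    bullets: list[str] = field(default_factory=list)
    subsections: list["Section"] = field(default_factory=list)

    def render_markdown(self, level: int = 2) -> str:
        # A heading, then optional body text, then optional bullets.
        lines = [f"{'#' * level} {self.title}", ""]
        if self.body:
            lines += [self.body, ""]
        if self.bullets:
            lines += [f"- {bullet}" for bullet in self.bullets]
            lines.append("")
        # Nested sections render one heading level deeper.
        for sub in self.subsections:
            lines.append(sub.render_markdown(level + 1))
        return "\n".join(lines).rstrip() + "\n"


ordering = Section(
    title="Order Taking",
    body="Take the caller's order for a drive-thru restaurant.",
    bullets=[
        "Confirm each item back to the caller.",
        "Offer a combo only when the system suggests one.",
    ],
)
ordering.subsections.append(
    Section(title="Limits", bullets=["Never accept more than 10 items per order."])
)

print(ordering.render_markdown())
```

Because the prompt is built from objects, a single bullet or subsection can be changed, diffed, and reviewed on its own instead of being edited inside one long string.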

By separating behavior into sections, you can:

Easily reuse logic across multiple agents

Render prompts as markdown or JSON

Integrate structured data with AI execution logic

Version-control individual instructions cleanly

Your AI prompt is now a software artifact with structure, history, and intent.
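The reuse and rendering points above can be sketched with plain dictionaries. Everything here is an assumption made for illustration: the `COMPLIANCE` section, the `build_prompt` helper, and the two render functions are hypothetical and do not reflect how signalwire-pom names or structures things.

```python
import json

# Hypothetical shared section, defined once and reused by every agent.
COMPLIANCE = {
    "title": "Compliance",
    "bullets": ["Never read a full card number back to the caller."],
}


def build_prompt(agent_name: str, role: str) -> list[dict]:
    """Compose an agent prompt from a role section plus the shared section."""
    return [
        {"title": "Role", "body": f"You are {agent_name}, {role}."},
        COMPLIANCE,
    ]


def render_json(sections: list[dict]) -> str:
    """Machine-readable rendering of the same structure."""
    return json.dumps(sections, indent=2)


def render_markdown(sections: list[dict]) -> str:
    """Human-readable rendering of the same structure."""
    lines = []
    for section in sections:
        lines.append(f"## {section['title']}")
        if section.get("body"):
            lines.append(section["body"])
        lines.extend(f"- {bullet}" for bullet in section.get("bullets", []))
        lines.append("")
    return "\n".join(lines).strip()


booking_prompt = build_prompt("Ava", "a hotel booking agent")
returns_prompt = build_prompt("Max", "an e-commerce returns agent")

print(render_markdown(booking_prompt))
print(render_json(returns_prompt))
```

Both agents carry the same compliance section, so a change to that one definition reaches every prompt that includes it.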

Why POM makes voice AI agents smarter

LLMs are useful tools, but give them vague instructions and you’ll get vague results. Having a poorly structured prompt can lead to inconsistent results, hallucinations, and the AI agent not following the instructions you provided.

With POM, developers tell the system what matters, and the system tells the LLM only what it needs to know. Nothing more.

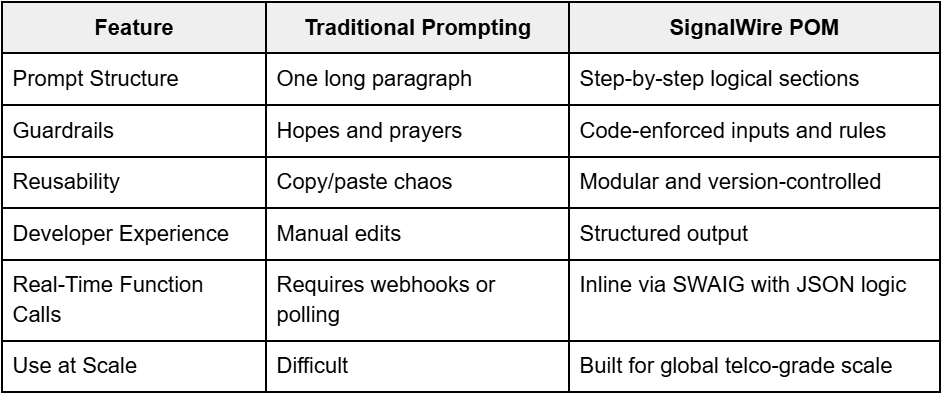

Structured prompting vs. traditional prompting

Let’s look at a real-world example, like a voice AI agent that takes orders at a fast food drive-through:

Traditional Prompt

This looks fine at first glance. But there are no guardrails. The LLM can forget these instructions, override them, or just hallucinate based on tone.

Hard limits & validation

This enforces hard business rules and prevents abuse (like “I want 1,000 tacos”). There’s no room for the LLM to interpret or decide what to do. The logic is enforced in code, not in vague prompt suggestions.

A traditional prompt might tell the LLM, “Don’t let the user order more than 10 items.”

Here, we guarantee it programmatically.
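As a rough illustration of what enforcing a limit in code can look like, here is a small validation function. The names `MAX_ITEMS_PER_ORDER` and `validate_order` are hypothetical, and the limit of 10 is simply borrowed from the example above.

```python
MAX_ITEMS_PER_ORDER = 10  # assumed limit, taken from the example in the text


def validate_order(items: dict[str, int]) -> tuple[bool, str]:
    """Return (ok, reason). The check runs before the LLM sees anything."""
    for name, quantity in items.items():
        if quantity < 1:
            return False, f"Invalid quantity for {name}."
    total = sum(items.values())
    if total > MAX_ITEMS_PER_ORDER:
        return False, (
            f"Order has {total} items; the limit is {MAX_ITEMS_PER_ORDER}."
        )
    return True, "ok"


print(validate_order({"taco": 2, "soda": 1}))
print(validate_order({"taco": 1000}))
```

A request for 1,000 tacos is rejected by the function itself, whatever the model might have been inclined to say.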

Business logic & combo detection

Business logic kicks in to see if the current items match known combo deals. This can be dynamic (read from a database or API). The output is used to inform the AI, but only after actual logic evaluates it.

Instead of telling the LLM “Remember to upsell combos,” you're giving it pre-validated opportunities. The model becomes a communicator, not a reasoner.
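A sketch of combo detection under simple assumptions: the `COMBOS` table below is a hypothetical in-memory stand-in for whatever database or API holds the real deals, and `find_combos` is an illustrative name.

```python
# Hypothetical combo table; in practice this could be loaded from a database or API.
COMBOS = {
    "Taco Combo": frozenset({"taco", "soda"}),
    "Burrito Meal": frozenset({"burrito", "chips", "soda"}),
}


def find_combos(order_items: set[str]) -> list[str]:
    """Return the combos whose required items are all present in the order."""
    return [
        name
        for name, required in COMBOS.items()
        if required <= order_items  # subset test: every required item was ordered
    ]


print(find_combos({"taco", "soda", "chips"}))
```

Only the combos returned here would be passed along, so the model never has to work out for itself which deals apply.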

Pricing & calculation

Traditional prompting might ask: “Apply correct discounts and total the price.” That invites hallucination.

Here, we compute it in code, then feed the result back to the AI for presentation. Nothing is left for the LLM to guess, and the calculation step can be swapped for tax calculation, discount logic, and so on.
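A minimal pricing sketch, assuming a hypothetical `MENU_PRICES` table and a flat percentage discount. It uses Python's `decimal` module so that currency rounding is explicit rather than subject to floating-point error.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical prices, for illustration only.
MENU_PRICES = {"taco": Decimal("2.50"), "soda": Decimal("1.75")}


def price_order(items: dict[str, int], discount_rate: Decimal = Decimal("0")) -> Decimal:
    """Total the order in code and apply a flat discount rate (0.10 means 10%)."""
    subtotal = sum(
        (MENU_PRICES[name] * quantity for name, quantity in items.items()),
        Decimal("0"),
    )
    total = subtotal * (Decimal("1") - discount_rate)
    # Round to whole cents, with halves rounded up.
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(price_order({"taco": 2, "soda": 1}))
print(price_order({"taco": 2, "soda": 1}, Decimal("0.10")))
```

The model receives the finished total as a value to read out, not a sum to perform.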

LLM-friendly context generation

This code generates context for the LLM rather than letting the model infer it from memory or vague prompts. This is the only part the LLM will “see” as part of its next prompt cycle. It’s a human-friendly message, but entirely generated from business rules, not model inference.
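One way to picture this step is a function that turns already-validated values into a single sentence for the model. The `build_context` name and the message wording are assumptions for illustration.

```python
def build_context(items: dict[str, int], total: str, combos: list[str]) -> str:
    """Build the message the LLM will see from values computed in code."""
    parts = [f"{quantity} x {name}" for name, quantity in items.items()]
    message = f"Order so far: {', '.join(parts)}. Total: ${total}."
    if combos:
        message += f" Eligible combos to offer: {', '.join(combos)}."
    return message


print(build_context({"taco": 2, "soda": 1}, "6.75", ["Taco Combo"]))
```

Every number and combo name in the message comes from earlier code, so the model's job is reduced to saying it naturally.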

Structured response & event emission

Finally, this wraps the result so it can be returned into the voice AI agent’s call session.

swml_user_event emits a structured event that can be used in analytics, frontend updates, or triggering additional SWML logic.

This is what turns your voice AI agent from a chat app into a stateful application. The function doesn't just return text; it updates the app state.
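The shape of such a result might look something like the sketch below. The actual payload format for swml_user_event is not shown in this post, so the envelope here (the `response` and `event` keys, the `order_updated` type, and the `build_function_result` helper) is purely an assumption used to show text and state travelling together.

```python
import json


def build_function_result(message: str, order_state: dict) -> dict:
    """Bundle the text for the LLM with a structured event for the application.

    The field names are hypothetical; consult the SignalWire documentation
    for the real response and event formats.
    """
    return {
        "response": message,  # what the agent will speak from
        "event": {  # what analytics or a frontend could consume
            "type": "order_updated",
            "payload": order_state,
        },
    }


result = build_function_result(
    "Order so far: 2 x taco. Total: $5.00.",
    {"items": {"taco": 2}, "total": "5.00"},
)
print(json.dumps(result, indent=2))
```

Keeping the spoken text and the structured state in one return value is what lets the rest of the application react to the call as it happens.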

Overall, with the SignalWire POM snippet, you’re not just "telling" the LLM what to do. You’re enforcing a structure around what it’s allowed to see and respond to. That’s the power of POM.

Use cases

Hotel reservations

Use code to validate room availability, enforce loyalty discounts, and never allow overbooking. The LLM only speaks when the system gives it validated info.

E-commerce returns

Build agents that follow return windows down to the minute, reference original purchase records, and enforce policy exceptions based on account tier.

Restaurant ordering

Create a drive-through agent that enforces menu rules, checks inventory in real time, and never sells breakfast after 10:30 AM.
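The breakfast rule is a good example of logic that belongs in code. In this sketch, `BREAKFAST_CUTOFF` and `available_menus` are hypothetical names, and the choice to stop breakfast at exactly 10:30:00 is an assumption about how the rule should be read.

```python
from datetime import time

BREAKFAST_CUTOFF = time(10, 30)  # assumed: breakfast ends at exactly 10:30 AM


def available_menus(now: time) -> list[str]:
    """Return the menus the agent may offer at the given local time."""
    menus = ["main"]
    if now < BREAKFAST_CUTOFF:
        menus.insert(0, "breakfast")
    return menus


print(available_menus(time(9, 15)))
print(available_menus(time(10, 45)))
```

If the breakfast menu is never handed to the model after the cutoff, there is nothing for it to sell by mistake.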

Prompt like a developer. Build smarter voice AI.

If you're still treating AI prompt engineering as a UX or copywriting task, you're doing it wrong. In any voice-driven application, prompting is part of the codebase, and it should be treated with the same rigor.

SignalWire's POM system, combined with params and functions, lets you design AI behavior like a developer instead of someone tweaking a prompt in ChatGPT. And that’s the difference between a working prototype and a production-grade voice assistant.

SignalWire’s AI Agents Python SDK is available to use today for developers building real-world voice apps that need structure, security, and low latency. To learn more about building real voice AI agent experiences with full control and developer sanity, bring your thoughts and questions to our community Discord.

Frequently asked questions

What is the Prompt Object Model (POM)?

The Prompt Object Model is a structured, code-friendly way to define AI prompts with sections, subsections, and bullets that can be rendered to Markdown or JSON, giving developers more control than freeform text prompts.

Why are traditional prompts problematic for real-world AI applications?

Freeform text prompts can be hard to debug, reuse, and version-control, and they may lead to inconsistent behavior and hallucinations when used in production-grade voice AI agents.

How does POM make prompt engineering more manageable?

POM lets developers build logical structures for prompts, isolate reusable sections, enforce formatting rules automatically, and integrate prompt definitions into code with better versioning and testing workflows.

What formats can POM render prompts into?

POM can render structured prompts into machine-readable JSON or human-friendly Markdown to support different engineering and runtime needs.

Why is structured prompting important for voice AI agents?

For real-time voice interactions and workflows such as bookings, ordering, or authentication, poorly structured prompts can fail unpredictably; POM enables reliable agent behavior by separating logic from text and treating prompts as modular code artifacts.