Secure payment processing over voice AI has to deliver both convenience and compliance. Your AI agents must never expose sensitive card data or personally identifiable information (PII), yet they still need to support real-time payment flows. SignalWire’s approach separates conversational logic from secure transaction handling by using DTMF collection and isolated payment channels. As a result, credit card data never touches the AI model and the payment flow stays PCI DSS compliant. This post explains the key architectural principles, safe data collection patterns, and how to build your own secure AI-based payment agent.

How to Build AI Agents with PCI DSS Compliant Payment Processing

When building applications that accept payments over the phone, the stakes are high. Customers demand convenience, but businesses must guarantee security and compliance with regulations like PCI DSS.

At ClueCon 2025, Senior Escalation Engineer Shane Harrell showed how to do this with a SignalWire AI payment demo that walks developers through building a secure AI payment agent end to end.

The demo uses the Python Agents SDK to build a secure, AI-powered payment workflow over the phone. Callers enter credit card numbers as DTMF tones, and account details are handled as metadata. Payments are processed without exposing sensitive data to the AI model at any point during the call.

This post explores how SignalWire’s approach keeps sensitive customer data safe and walks through the key pieces of Shane’s demo so you can build your own PCI-compliant AI payment system.

Why does secure AI payment processing matter?

AI now handles a growing share of customer interactions, including transactions. But integrating payments into AI systems carries serious risks. If your AI agent mishandles even one transaction, you can expose sensitive data and break compliance. A single leak of card data can permanently damage a company’s reputation.

Credit card numbers, CVVs, billing addresses, and personally identifiable information (PII) are valuable targets for fraud. LLMs aren’t designed to safeguard PII, and unguarded prompts can accidentally log or repeat card data. Meanwhile, standards like PCI DSS apply to any business that stores, processes, or transmits cardholder data, and they mandate strict handling of payment data.

The solution is to architect AI agents so they never touch sensitive data directly. Instead of letting the LLM see or store payment data, Shane’s workshop demonstrates how to separate workflows so that sensitive information bypasses the AI entirely.

System architecture: Secure by design

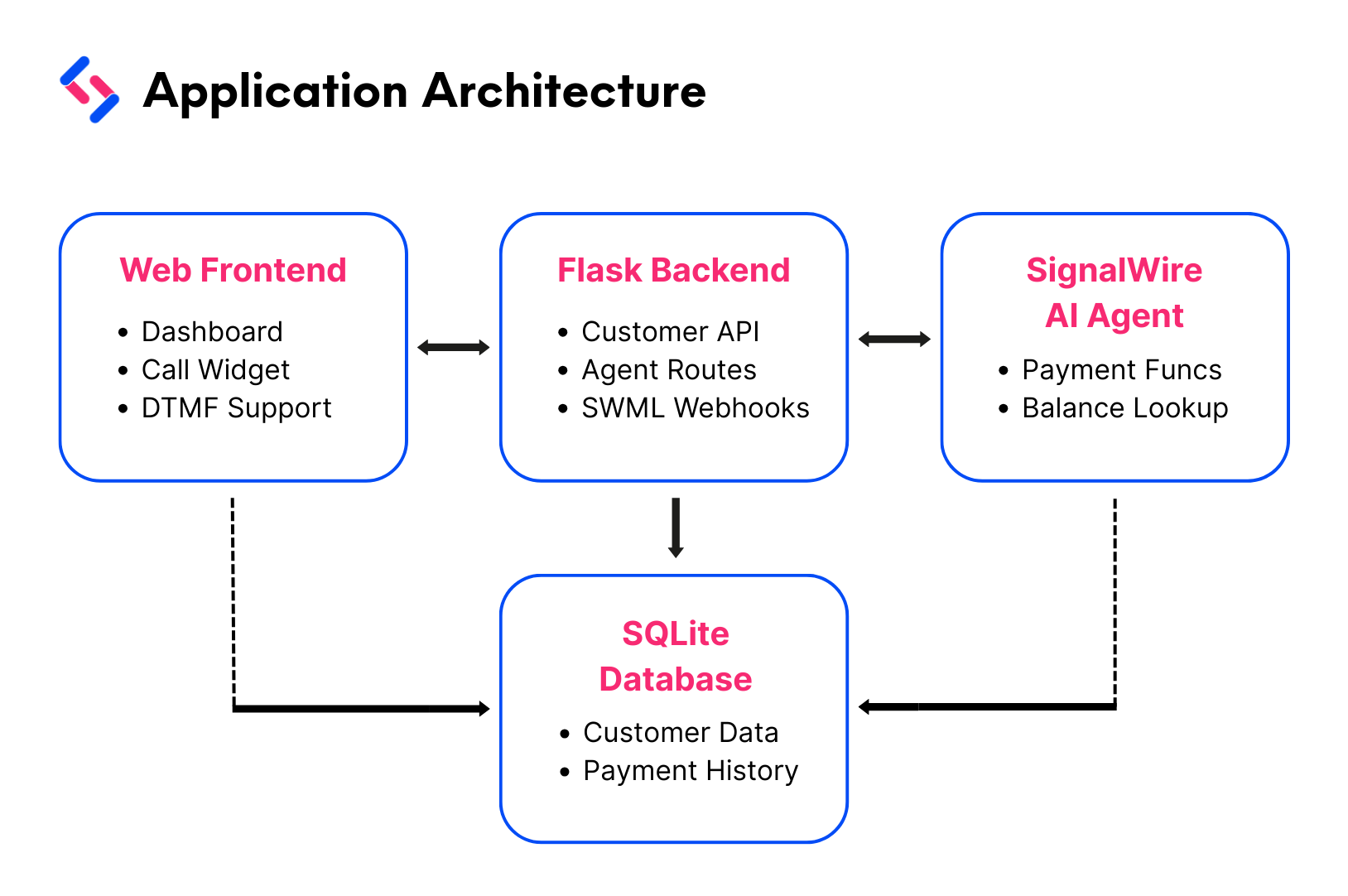

The architecture supporting this demo separates conversation (handled by the AI agent) from sensitive payment operations (handled by SignalWire Pay and DTMF collection). This ensures the AI runtime never touches cardholder data.

Key components

Flask backend (app.py): serves dashboard, manages customer accounts and data, routes calls

AI agent (atom_agent.py): handles dialog, executes SWAIG functions, manages secure payment flow

SQLite DB: stores customer accounts, balances, and transactions

Call widget: click-to-call from the dashboard, includes keypad for DTMF input, error handling, event handling, state management

SWML webhooks: dynamically updated via an ngrok tunnel
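To make the storage side concrete, here is a minimal sketch of what a SQLite schema for this kind of app could look like. The table and column names are our own assumptions, not the repo’s. The schema holds balances, a salted PIN hash, and a processor reference for each transaction. It has no column for card numbers, CVVs, or expiration dates, because card data is handled in the isolated payment step rather than kept in the app’s database.

```python
import sqlite3
from typing import List

# Hypothetical schema; table and column names are illustrative assumptions,
# not taken from the demo repo.
SCHEMA = """
CREATE TABLE IF NOT EXISTS customers (
    account_number TEXT PRIMARY KEY,
    name           TEXT NOT NULL,
    address        TEXT NOT NULL,
    pin_salt       BLOB NOT NULL,   -- per-customer random salt
    pin_hash       BLOB NOT NULL,   -- salted hash; the raw PIN is never stored
    balance_cents  INTEGER NOT NULL DEFAULT 0
);
CREATE TABLE IF NOT EXISTS transactions (
    id             INTEGER PRIMARY KEY AUTOINCREMENT,
    account_number TEXT NOT NULL REFERENCES customers(account_number),
    amount_cents   INTEGER NOT NULL,
    status         TEXT NOT NULL,   -- e.g. 'success' or 'failed'
    processor_ref  TEXT             -- processor's reference ID, not card data
);
"""

def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open the database and create both tables if they don't exist yet."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn

def column_names(conn: sqlite3.Connection, table: str) -> List[str]:
    """List a table's columns, e.g. to audit that no card fields exist.

    The table name is interpolated directly, so only pass trusted names.
    """
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]
```

A quick audit such as `column_names(open_db(), "customers")` makes it easy to confirm that no card-related column has crept into the schema.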

What are best practices for AI agent data collection?

This demo shows how to keep sensitive data away from AI models, encrypt voice and media streams, and verify customers with secure PINs. The result is a practical blueprint for secure AI-powered payment processing that’s developer-friendly and compliant.

Shane outlines several key principles for secure AI agent design:

Avoid collecting PII or payment data in the LLM

The AI should never directly ask for or store card numbers, PINs, or other sensitive fields.

Use metadata & SignalWire AI Gateway (SWAIG) functions

Store and retrieve necessary details outside of the LLM using metadata and SWAIG functions (our version of MCP, the Model Context Protocol). This keeps the AI efficient while isolating sensitive data.

Secure transactions with SWML pay

With the SWML pay method, transactions happen securely outside of the LLM over HTTPS. This method is adaptable to any payment processor (like Stripe or custom gateways). Payment entry happens in a walled-off, PCI-compliant channel, never inside the AI conversation.

Add system prompts for guardrails

A strong system prompt keeps the AI on task and prevents it from accidentally leaking or repeating sensitive data.
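As a rough illustration of the guardrail layer, the sketch below pairs an example system prompt with an extra check we’ve added for this post. Before the agent’s reply is spoken or logged, it is scanned for digit runs that pass the Luhn checksum used by card numbers. The prompt wording, `SYSTEM_PROMPT`, and `find_card_like_number` are hypothetical. The check is a backstop on top of keeping card data out of the LLM, not a substitute for it.

```python
import re
from typing import Optional

# Illustrative guardrail prompt; the wording is an assumption, not the demo's prompt.
SYSTEM_PROMPT = """\
You are the billing assistant for Max Electric.
- Never ask the caller to say a card number, CVV, expiration date, or PIN aloud.
- When the caller is ready to pay, call the payment function; the caller
  enters card details on the keypad, outside this conversation.
- Never repeat numbers from the payment step. Only report whether the
  transaction succeeded or failed.
"""

# Runs of 13 to 19 digits, optionally separated by single spaces or dashes.
_CANDIDATE = re.compile(r"(?:\d[ -]?){12,18}\d")

def _luhn_ok(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def find_card_like_number(text: str) -> Optional[str]:
    """Return the first Luhn-valid digit run in text, or None if there is none."""
    for match in _CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if _luhn_ok(digits):
            return digits
    return None
```

If `find_card_like_number` returns anything for an outgoing reply, the safest response is to drop that reply and fall back to a canned message rather than trying to mask it.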

Demo summary: From “quick and dangerous” to secure

To illustrate how these practices affect real call flows, Shane presented two live demos during his ClueCon workshop: a quick version and a secure version of the same AI agent. Both serve a fictional company, Max Electric, whose dashboard lets customers log in, view their balances, and pay bills.

Within the dashboard, customers use a click-to-call widget to launch a voice call, and an AI agent then takes over the call flow to offer payment assistance. The dashboard also demonstrates integration with a customer database for balance lookups and payment processing.

On the surface, both demos produce an AI agent that collects a credit card payment over the phone. Under the hood, however, one breaks PCI DSS compliance and the other is production-ready.

How the insecure (quick) demo fails compliance

In the first demo, the AI agent directly asks for credit card information, repeats it back for confirmation, and exposes details to the transcription channel. While functional, this flow is risky because:

No identity verification is performed

Metadata is not used, and everything passes through the LLM

The AI has direct access to sensitive customer information

Credit card data is exposed in logs and transcriptions

This flow works and is fast to spin up, but it is completely insecure. It passes payment details directly through the conversational AI model, so the AI can see and store card data, which immediately breaks PCI DSS compliance.

The advanced (secure) example: Best practices in action

In the second demo, card data never touches the AI. Instead, DTMF tones are collected, and SWML pay handles the transaction. The AI only manages prompts and confirmations.

This shows the right way to build secure AI payment workflows with SignalWire. Here’s what it does differently from the initial demo:

Identity verification with metadata

Before payment, the customer is asked for a PIN. This PIN is never exposed to the AI. It is verified in the backend and returned as a yes/no response.
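A minimal sketch of the yes/no PIN check follows. It assumes the PIN reaches the backend as keypad digits and is stored as a salted PBKDF2 hash. The function names `hash_pin` and `verify_pin` and the iteration count are illustrative, not taken from the demo.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 100_000  # illustrative PBKDF2 work factor; tune for your hardware

def hash_pin(pin: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, hash) for storage so the raw PIN is never persisted."""
    salt = salt if salt is not None else os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", pin.encode(), salt, ITERATIONS)
    return salt, digest

def verify_pin(entered_pin: str, salt: bytes, stored_hash: bytes) -> str:
    """Check a keypad-entered PIN against the stored hash.

    Only "yes" or "no" is returned, and that answer is all the AI agent sees.
    """
    _, candidate = hash_pin(entered_pin, salt)
    # Constant-time comparison avoids leaking how many bytes matched.
    return "yes" if hmac.compare_digest(candidate, stored_hash) else "no"
```

Because a 4- to 6-digit PIN has only a small number of possible values, hashing alone won’t stop someone who obtains the database. Capping PIN attempts per call matters at least as much.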

Metadata passing

Customer name, account number, and address are validated with backend functions. The AI only knows whether the checks passed, not the actual values.
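The sketch below shows the shape of this pattern, with a plain dictionary standing in for per-call metadata. It is not SignalWire’s metadata API. The call ID, the `CUSTOMERS` record, and `validate_account` are hypothetical, and we assume the caller’s details reach the backend through a channel outside the LLM. The verified values stay in the server-side record, and the AI only receives a pass/fail string.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class CallMetadata:
    """Server-side record for one call; never placed in the model's prompt."""
    account_number: str = ""
    name: str = ""
    address: str = ""
    checks: Dict[str, bool] = field(default_factory=dict)

# In-memory stand-in for per-call metadata, keyed by a hypothetical call ID.
METADATA: Dict[str, CallMetadata] = {}

# Hypothetical customer record; the real demo keeps customers in SQLite.
CUSTOMERS = {"100234": {"name": "Ada Lovelace", "address": "12 Analytical Way"}}

def _norm(value: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't fail a check."""
    return " ".join(value.lower().split())

def validate_account(call_id: str, account_number: str, name: str, address: str) -> str:
    """Validate caller details and return only a pass/fail summary for the AI."""
    record = CUSTOMERS.get(account_number)
    meta = METADATA.setdefault(call_id, CallMetadata())
    meta.checks = {
        "account": record is not None,
        "name": record is not None and _norm(record["name"]) == _norm(name),
        "address": record is not None and _norm(record["address"]) == _norm(address),
    }
    if all(meta.checks.values()):
        # Keep the canonical values server-side for later steps such as payment.
        meta.account_number = account_number
        meta.name, meta.address = record["name"], record["address"]
        return "account verified"
    return "account not verified"
```

Keeping the per-check results in `checks` lets the backend log which check failed without ever handing those details to the model.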

SWML pay isolation

When it’s time to collect payment, the AI gracefully hands the customer off to SWML pay, a secure payment channel. At that moment, transcription stops, which shows that the LLM is completely walled off from the payment step.
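For reference, here is a sketch that builds a SWML document using the `pay` method and serializes it as JSON from Python. The parameter names (`input`, `charge_amount`, `payment_connector_url`, `status_url`) reflect our reading of the SWML `pay` reference and should be checked against the current docs. The URLs and the `build_pay_swml` helper are placeholders, and how the agent hands the call to this document is not shown.

```python
import json

def build_pay_swml(amount: str, connector_url: str, status_url: str) -> str:
    """Build a SWML document that moves the caller into the `pay` method.

    Parameter names follow our reading of the SWML `pay` reference;
    verify them against the current documentation before relying on this.
    """
    pay_step = {
        "pay": {
            "input": "dtmf",                        # card details come from the keypad
            "charge_amount": amount,                # amount as a string, e.g. "42.50"
            "payment_connector_url": connector_url, # your endpoint that talks to the processor
            "status_url": status_url,               # receives payment status callbacks
        }
    }
    doc = {"version": "1.0.0", "sections": {"main": [pay_step]}}
    return json.dumps(doc, indent=2)
```

Nothing in this document passes through the LLM: the model only decides when to trigger the handoff, and the keypad digits flow from the call to the connector URL.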

DTMF input for PCI compliance

The customer enters their credit card details using DTMF tones (keypad input). This ensures the card data never touches the LLM, keeping the process PCI compliant.

Simple agent feedback

Once the payment processor confirms success, the AI agent is only told “transaction successful.” It never sees card numbers, CVVs, or billing details. It does not repeat back the card number or any other sensitive information to the caller.
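In code, the feedback step can be as small as a function that reduces the processor’s result to a fixed phrase. The `status` field and the `"success"` value below are assumptions about the result format. The key idea is that only the returned string, never the raw result, is passed back to the agent.

```python
from typing import Any, Mapping

def summarize_for_agent(result: Mapping[str, Any]) -> str:
    """Reduce a payment result to the only thing the AI agent is told.

    The "status" key and "success" value are hypothetical; adapt them to
    whatever your payment connector actually reports.
    """
    if result.get("status") == "success":
        return "transaction successful"
    # Any other outcome, including a missing status, is reported as a failure.
    return "transaction failed"
```

Returning a fixed vocabulary means no field from the processor payload, such as a card number or billing ZIP, can leak into the conversation, even by accident.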

The result is a secure, PCI-compliant, low-latency flow that preserves customer trust while allowing automation.

Try the secure AI payment demo

View the repo for full instructions on how to launch a payment-processing AI agent. This example supports both Docker builds and local Python environments.

Make sure you have your SignalWire credentials ready and create a .env file from the provided env.sample template before starting. You’ll need Python 3.8+, Docker (optional but recommended), and an ngrok account (for local development tunneling).

If using Docker, simply clone the repository, configure environment variables, build and run with Make commands, and access the application.

If using a local Python environment, install dependencies, configure the environment, and run the application.
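Before starting the app, it can help to confirm that your .env file is complete. This sketch parses simple `KEY=value` lines and reports missing settings. The key names in `REQUIRED` are hypothetical, so replace them with the ones in the repo’s env.sample.

```python
from typing import Dict, List, Sequence

# Hypothetical setting names; use the keys from the repo's env.sample instead.
REQUIRED = ("SIGNALWIRE_PROJECT_ID", "SIGNALWIRE_TOKEN", "SIGNALWIRE_SPACE", "NGROK_AUTHTOKEN")

def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Strip surrounding quotes, as commonly used in .env files.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values

def missing_settings(values: Dict[str, str], required: Sequence[str] = REQUIRED) -> List[str]:
    """Return required keys that are absent or empty, in the order listed."""
    return [key for key in required if not values.get(key)]
```

Calling `missing_settings(parse_env(open(".env").read()))` at startup and refusing to run if the list is non-empty turns a confusing webhook failure into a clear configuration error.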

Start building AI agents today with SignalWire

This ClueCon 2025 demo combines metadata isolation, SWML pay, and strong prompt guardrails to show how developers can design secure AI payment systems that never expose sensitive card data to the LLM. If you’re interested in similar workshops and presentations, register for next year’s conference or subscribe to the FreeSWITCH YouTube channel to watch the live presentations from previous years.

Whether you’re prototyping a new voice AI workflow or scaling production-ready call flows, SignalWire gives you the programmable infrastructure to build applications that are both low-latency and secure.

If you’re ready to experiment, dive into the repo, spin up the advanced agent, and see how it works. Start building today and bring any questions to our community of developers on Discord.

Frequently asked questions

Why is secure payment processing important for voice AI agents?

Voice AI systems that handle payments face both technical and regulatory risks. Exposing card data to the AI or storing it insecurely can violate compliance and damage customer trust.

What is PCI DSS compliance and why does it matter?

The Payment Card Industry Data Security Standard (PCI DSS) defines security controls for handling credit card information. Systems that process payments must protect cardholder data from unauthorized access. In practice, that means keeping it out of any component, such as an AI model, that isn’t part of the secured payment environment.

How do you prevent sensitive data from reaching the AI model?

Design the workflow so that card numbers, CVVs, and other PII are captured via secure channels (e.g., DTMF or isolated APIs) and never passed into the model’s context or logs.

What role does DTMF input play in secure voice payments?

DTMF (keypad) input enables callers to enter sensitive payment details in a channel the AI does not access, ensuring compliance and privacy.

Can the AI still confirm successful payments without seeing card data?

Yes. The secure flow lets the AI receive only high-level status (e.g., “transaction successful”), so it can continue the conversation without ever handling sensitive details.